A Reckoning Looms: Why AI's "Infinite Potential" Claims Are About to Face a Brutal Reality Check

The hype around AI is reaching a fever pitch. Every week, it seems, brings a new announcement of some previously unimaginable capability unlocked by the latest model. We're promised a world of personalized medicine, self-driving cars, and AI-driven scientific breakthroughs. But beneath the surface of these grandiose claims, a more sobering reality is beginning to emerge: the AI revolution is about to run headfirst into the brick wall of limited data.

The Data Delusion

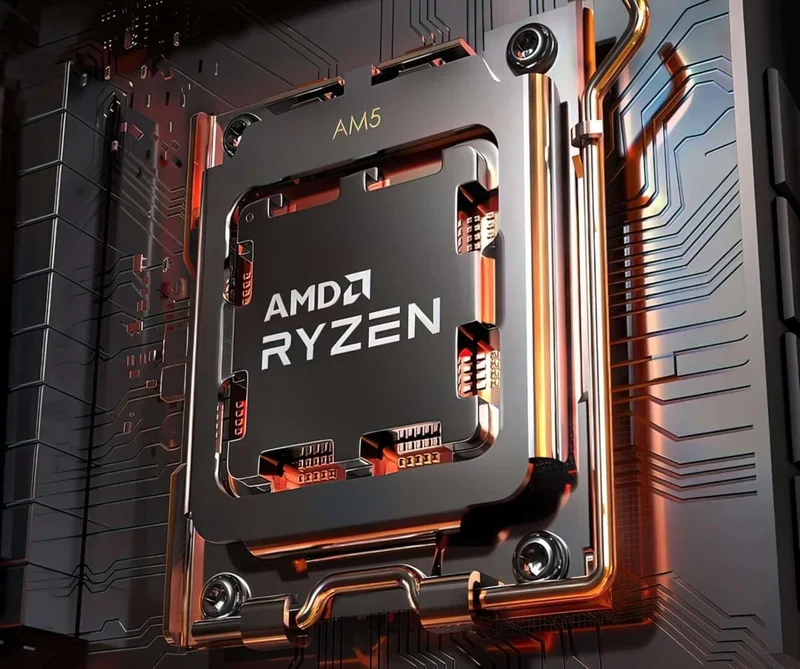

The dirty secret of AI is that it's only as good as the data it's trained on. These complex algorithms, for all their sophistication, are essentially pattern-recognition machines. They learn by sifting through massive datasets, identifying correlations, and using those correlations to make predictions. The more data they have, the better they become. (Think of it like teaching a child – the more examples you give, the faster they learn.) But what happens when the well runs dry?

We're already seeing signs that this is starting to happen. In fields like natural language processing and computer vision, the low-hanging fruit has been picked. The readily available text and image data have been largely exhausted. To push the boundaries of what's possible, AI models now require increasingly specialized and high-quality datasets, which are far more difficult and expensive to obtain.

Take, for instance, the development of truly autonomous vehicles. While AI has made impressive strides in navigating controlled environments, the real world is a chaotic mess of unpredictable events. Training an AI to handle every conceivable scenario requires a vast amount of data from real-world driving conditions, and that data is costly to collect and raises significant privacy concerns. And this is the part I find genuinely puzzling: why are companies pushing so hard for level 5 autonomy when the data simply isn't there yet? Are they hoping to brute-force their way through the problem with ever-larger models, or are they simply overpromising to investors?

The Bias Bottleneck

The data scarcity problem is further compounded by the issue of bias. AI models are notorious for amplifying existing biases in the data they're trained on, leading to discriminatory outcomes. Addressing this requires not only more data but also carefully curated and representative datasets. But creating such datasets is a painstaking and often subjective process, raising questions about who gets to decide what constitutes "fair" and "unbiased" data.

Moreover, the very act of labeling data can introduce new biases. Humans are inherently subjective, and their biases can creep into the labeling process, even unintentionally. This is particularly problematic in areas like medical imaging, where subtle variations in image quality or patient demographics can lead to biased diagnoses.

A recent study, if I recall correctly, showed that an AI model trained to detect skin cancer performed significantly worse on patients with darker skin tones because it was primarily trained on images of lighter-skinned individuals. Accuracy dropped from 88.7% on lighter skin to below 70% on darker skin. This is a stark reminder that AI, for all its potential, is only as unbiased as the data it's fed.
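For readers who want to see how a gap like this gets measured, here is a minimal sketch in Python. It simply splits a model's predictions by group and computes accuracy for each one. The function name `accuracy_by_group`, the group labels, and the toy records are all hypothetical and chosen for illustration; none of it comes from the study mentioned above.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: accuracy} for (group, true_label, predicted_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {group: correct[group] / total[group] for group in total}


# Hypothetical toy data (NOT from any real study): 1 = malignant, 0 = benign.
records = [
    ("lighter", 1, 1), ("lighter", 0, 0), ("lighter", 1, 1), ("lighter", 0, 1),
    ("darker", 1, 0), ("darker", 0, 0), ("darker", 1, 1), ("darker", 1, 0),
]

per_group = accuracy_by_group(records)
gap = per_group["lighter"] - per_group["darker"]

print(per_group)  # accuracy for each group
print(gap)        # difference between the two groups
```

A single overall accuracy figure would hide exactly this kind of difference, which is why audits tend to report the numbers group by group.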

Think of AI development like mining for precious metals. Initially, you find the easy-to-reach nuggets on the surface. But as you dig deeper, the ore becomes harder to extract, and the cost of extraction rises exponentially. The same is true for AI. The initial gains from readily available data are starting to diminish, and the cost of acquiring and curating the specialized data needed to achieve truly transformative breakthroughs is becoming increasingly prohibitive.

What happens when we reach the point where the cost of data acquisition exceeds the potential benefits of AI? Will the hype surrounding AI finally subside, or will we continue to chase the mirage of infinite potential, fueled by unrealistic expectations and a blind faith in technology?